The rapid growth of image generation has made creating high-quality visuals easier than ever. For companies, however, this ease often comes with hidden risks. Unlike traditional machine learning models, generative AI systems, and image generation models in particular, fail in subtle ways: a hand with six fingers, a “professional setting” that lacks diversity, or a brand mascot placed in an unsafe context. Automated metrics provide a baseline, but they cannot judge intent or cultural fit. As models move toward deployment, high-volume human evaluation becomes essential to ensure outputs are safe, accurate, and usable.

As image generation systems move from R&D into enterprise deployment, these risks compound. Ad-hoc human evaluation is often the first part of the pipeline to strain under volume, producing inconsistent judgments, noisy data, and signals that fail to meaningfully improve model performance. In response, leading teams are shifting toward structured, human-in-the-loop evaluation systems built around calibration, disagreement handling, and continuous quality control.

To manage this at an enterprise level, companies must move beyond subjective “star ratings” and toward a structured, systems-led approach. This requires focusing on three core pillars: precise calibration, scalable disagreement resolution, and technology-driven quality control.

In high-volume environments, the risk is not a single bad image but the accumulation of small, unnoticed failures. When evaluation systems lack structure, errors propagate silently across datasets, creating false confidence in model readiness. This is why image generation programs tend to fail at deployment rather than during experimentation: deployment is where consistency, repeatability, and governance matter most.

Why Calibration Breaks First at High Volume

When evaluation expands from a handful of researchers to hundreds of reviewers distributed across regions and time zones, subjectivity becomes the dominant risk. Without alignment, even experienced reviewers interpret quality differently, producing data that looks consistent on the surface but collapses under scrutiny.

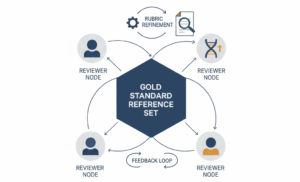

High-performing teams treat calibration as a continuous operational process rather than a one-time training step. Common mechanisms include:

- Gold Standard Benchmarking: Reviewers are tested against pre-vetted images with definitive scores before touching live data. This ensures every evaluator works from the same logic.

- Iterative Rubric Refinement: Calibration often reveals where a rubric is too vague. High-performing teams use these sessions to sharpen definitions, for example, exactly what constitutes “high” vs. “medium” photorealism.

- Continuous Sync Loops: Teams hold regular sessions to discuss edge cases so that reviewers keep applying the same standard as the model’s outputs evolve.

When calibration is handled informally or assumed to “self-correct,” reviewer drift sets in quietly. Models may appear to improve on paper while accumulating hidden inconsistencies that surface only during deployment, when reliability matters most.
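As a rough illustration of gold standard benchmarking, the sketch below compares one reviewer's scores with pre-vetted scores on the same images and reports whether the reviewer clears a pass bar. The function name, the 1-to-5 scale, the `tolerance` parameter, and the 0.8 pass threshold are all assumptions made for this example, not values taken from any specific program.

```python
from dataclasses import dataclass


@dataclass
class GoldCheckResult:
    reviewer_id: str
    accuracy: float  # share of gold images scored within tolerance
    passed: bool


def check_against_gold(reviewer_id, reviewer_scores, gold_scores,
                       tolerance=0, pass_threshold=0.8):
    """Compare a reviewer's scores with gold scores on shared image IDs.

    tolerance and pass_threshold are illustrative defaults (assumptions).
    """
    shared = [img for img in gold_scores if img in reviewer_scores]
    if not shared:
        raise ValueError("reviewer has not scored any gold-standard images")
    hits = sum(
        abs(reviewer_scores[img] - gold_scores[img]) <= tolerance
        for img in shared
    )
    accuracy = hits / len(shared)
    return GoldCheckResult(reviewer_id, accuracy, accuracy >= pass_threshold)


# Hypothetical gold set and reviewer answers on a 1-to-5 scale.
gold = {"img_001": 5, "img_002": 2, "img_003": 4, "img_004": 1}
reviewer = {"img_001": 5, "img_002": 3, "img_003": 4, "img_004": 1}

result = check_against_gold("reviewer_a", reviewer, gold)
print(result)
```

Running the same check on a schedule, rather than only at onboarding, is one way to surface drift: a reviewer whose gold accuracy declines over successive checks may need recalibration even if their live scores look plausible.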

Disagreement Resolution Without Slowing Delivery

In visual evaluation, disagreement is inevitable. Two experts can reasonably differ on whether a generated face appears natural or whether a scene feels authentic. The problem is not disagreement itself, but how it is handled.

Teams that scale successfully design explicit workflows to resolve differences without slowing production. A common approach involves:

- Triple-Pass Review: For high-priority tasks, three experts score the same output independently to establish a clear consensus.

- Expert Adjudication: When scores diverge, the system flags the image for a senior lead to make the final call.

- Feedback Integration: Adjudication shouldn’t just settle the tie; the rationale is documented and shared with the reviewers. This turns “hard cases” into a training signal that improves accuracy over time.

When no such process exists, teams often default to averaging scores. This smooths over variance but discards the most valuable information in the dataset: the edge cases where the model is struggling and human judgment matters most.
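A minimal sketch of the triple-pass and adjudication flow is shown below, assuming three integer scores per image and a hypothetical rule that any spread larger than one point is routed to a senior lead. The `route_triple_pass` name and the `max_spread` threshold are illustrative choices. The example also shows why averaging is lossy: two very different score patterns produce the same mean.

```python
from statistics import mean, median


def route_triple_pass(scores, max_spread=1):
    """Return a consensus score, or flag the item for expert adjudication.

    max_spread is an assumed threshold: the largest gap between the highest
    and lowest score that still counts as agreement.
    """
    if len(scores) != 3:
        raise ValueError("triple-pass review expects exactly three scores")
    spread = max(scores) - min(scores)
    if spread > max_spread:
        # Keep the raw scores so the adjudicator sees the disagreement.
        return {"status": "adjudicate", "scores": list(scores), "spread": spread}
    return {"status": "consensus", "score": median(scores), "spread": spread}


agreeing = route_triple_pass([3, 3, 3])
split = route_triple_pass([1, 3, 5])

# Both sets of scores have the same mean, but only one reflects agreement.
print(mean([3, 3, 3]), agreeing["status"])
print(mean([1, 3, 5]), split["status"])
```

Storing the adjudicator's rationale alongside the flagged record is what lets these cases feed back into reviewer training, as described in the feedback integration step.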

Quality Control for High-Volume Evaluation

As evaluation volume grows, manual spot checks and static QA processes fail to keep pace. Quality control must operate continuously, with system-level visibility into reviewer behavior and output consistency.

Mature evaluation programs rely on multiple, reinforcing controls to maintain reliability:

- Inter-Annotator Agreement (IAA) Tracking: Monitoring agreement scores in real time to catch reviewer fatigue or rubric ambiguity early.

- Automated Quality Backstops: Using AI to catch “low-hanging fruit,” such as explicit content or obvious anatomical errors, so that human experts can focus on complex nuance.

- Domain-Matched Reviewer Pools: Ensuring that structural engineering renders are checked by engineers, and cultural symbols are checked by diverse, regional experts.
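One common way to quantify inter-annotator agreement between two reviewers is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a plain-Python version for illustration; the article does not say which agreement statistic a given program uses, and the 0.6 alert threshold is an assumption rather than a standard.

```python
from collections import Counter

KAPPA_ALERT_THRESHOLD = 0.6  # assumed alert level, tune per rubric


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two reviewers labeling the same items in order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both reviewers must label the same non-empty item set")
    n = len(labels_a)
    # Observed agreement: share of items where both labels match.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each reviewer's label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both reviewers used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical pass/fail labels from two reviewers on the same four images.
reviewer_a = ["pass", "pass", "fail", "fail"]
reviewer_b = ["pass", "fail", "fail", "fail"]

kappa = cohens_kappa(reviewer_a, reviewer_b)
needs_review = kappa < KAPPA_ALERT_THRESHOLD
print(kappa, needs_review)
```

Computing this over a rolling window of recent items, per reviewer pair or per rubric axis, helps distinguish fatigue (one reviewer drifting) from rubric ambiguity (agreement falling across the whole pool).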

In image generation workflows specifically, quality control extends beyond visual correctness. Mature evaluation programs also assess prompt-image fidelity, aesthetic coherence, brand and style adherence, and rights-related risks such as logo misuse or stylistic overfitting. These dimensions require calibrated human judgment and multi-axis rubrics that automated checks cannot reliably enforce, particularly in multi-turn generation and image-editing workflows.
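To make the idea of a multi-axis rubric concrete, here is a small sketch in which each image receives a separate score per dimension instead of one overall rating. The axis names mirror the dimensions listed above, while the 1-to-5 scale, the `minimum` threshold, and the `failing_axes` helper are assumptions for illustration.

```python
from dataclasses import dataclass, fields
from statistics import mean


@dataclass(frozen=True)
class RubricScores:
    """Per-axis scores on an assumed 1-to-5 scale (5 is best)."""
    prompt_fidelity: int
    aesthetic_coherence: int
    brand_adherence: int
    rights_safety: int  # 1 = clear rights risk, 5 = no visible risk


def failing_axes(scores, minimum=3):
    """List every axis that falls below the minimum acceptable score."""
    return [f.name for f in fields(scores) if getattr(scores, f.name) < minimum]


# Hypothetical image: visually strong, but it contains a misused logo.
sample = RubricScores(prompt_fidelity=5, aesthetic_coherence=4,
                      brand_adherence=4, rights_safety=1)

overall = mean([sample.prompt_fidelity, sample.aesthetic_coherence,
                sample.brand_adherence, sample.rights_safety])
print(overall, failing_axes(sample))
```

In this example the averaged score sits above the minimum, yet the image fails on rights safety, which is the kind of failure a single star rating would hide.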

Platforms like Ango Hub support this kind of production-grade oversight, enabling teams to maintain reliability without introducing friction as evaluation volume increases. This system-level approach reflects how iMerit operationalizes human evaluation, treating quality not as a manual checkpoint, but as a continuously monitored production system.

The gap between ad-hoc image evaluation and production-grade evaluation shows up in how teams handle calibration, disagreement, and quality control.

| Evaluation Component | Ad-Hoc/Crowdsourced Approach | Production-Grade Approach |
|---|---|---|
| Calibration | One-time instructions; high subjectivity | Continuous “Gold Standard” loops; expert alignment |
| Disagreement | Averaging scores or ignoring outliers | Tiered adjudication; disagreement as a data signal |
| Quality Control | Manual spot-checks; high latency | Real-time IAA tracking via Ango Hub; automated backstops |
| Expertise | Generalist reviewers; “vibe-based” scoring | Domain-matched experts (Engineering, Medical, Cultural) |

Conclusion: Evaluation as Infrastructure

In today’s AI landscape, the ability to generate an image is no longer a differentiator. Reliability is. Human evaluation has moved beyond being a final check. It is increasingly treated as infrastructure, shaping how models are refined, how risks are surfaced early, and how deployment readiness is established.

This shift is already visible in how mature teams operate. At iMerit, evaluation programs are built around calibration, structured disagreement handling, and production-grade quality control, reflecting where the industry is headed rather than where it has been. As regulatory scrutiny increases and brand trust becomes harder to maintain, these systems provide not just better models, but clearer evidence of readiness for real-world use.

Is your human evaluation pipeline ready for production? Talk to our experts about operationalizing your image generation workflows.