Modern autonomous systems rely on high-definition (HD) maps as their single source of truth for localization and path planning. But when the real world changes, through updated lane markings or seasonal layout shifts in a warehouse, simulation environments fall out of sync with physical reality. As long as that gap persists, the same perception failures recur, no matter how much the model improves.

The solution to this drift is a closed-loop mapping pipeline: an architecture in which every real-world perception failure automatically triggers a map update, feeding fresh data back into the HD maps simulation.

This continuous, automated map maintenance keeps the digital map evolving with the real world and speeds up the safe deployment of autonomous vehicles (AVs), robotics, and Physical AI.

In this article, we cover the mechanisms for detecting these discrepancies, the reconstruction of 3D geometry from sensor logs, and the role of expert validation in maintaining ground-truth reliability at scale.

Telemetry and Triggering: Identifying Map-Perception Discrepancies

Perception failures often surface first as subtle inconsistencies between what the vehicle observes and what the map expects. The first step in updating high-definition maps is to catch these moments with automated failure triggers, which fire when a vehicle’s perception disagrees with the existing map data. These triggers look for high-confidence detections that do not match any feature in the HD map.

For example, if a vehicle’s camera identifies a stop sign with 99% confidence, but the HD map indicates the road is a through road with no such sign, the system flags a discrepancy.

Triggers are categorized by the nature of the conflict:

- Presence Conflict: Sensors detect an object, such as a new divider, that is not in the HD map.

- Absence Conflict: The map contains a feature, such as a lane marking, that the sensors cannot find despite clear visibility.

- Attribute Conflict: Sensors detect a change in a map property, such as a changed speed limit or a new turn restriction.
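The three trigger types can be illustrated with a simple matcher. This is a minimal sketch under stated assumptions, not a production trigger: detections and map features are reduced to 2D map-frame positions with a class label and an attribute dict, matching uses a fixed distance gate, and the names `Detection`, `MapFeature`, `classify_conflicts`, the `visible` flag, the 0.9 confidence threshold, and the 2 m gate are all hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Detection:
    label: str                 # semantic class, e.g. "stop_sign"
    x: float                   # position in the map frame, metres
    y: float
    confidence: float          # detector score in [0, 1]
    attributes: dict = field(default_factory=dict)


@dataclass
class MapFeature:
    feature_id: str
    label: str
    x: float
    y: float
    attributes: dict = field(default_factory=dict)
    visible: bool = True       # assumed: feature was in view with a clear line of sight


def classify_conflicts(detections, features, min_conf=0.9, gate_m=2.0):
    """Return (conflict_type, detail) tuples for presence, attribute, and absence conflicts."""
    conflicts, matched = [], set()
    for det in detections:
        if det.confidence < min_conf:
            continue  # only high-confidence detections may raise a trigger
        # nearest same-class map feature inside the distance gate
        best, best_d = None, gate_m
        for f in features:
            d = math.hypot(f.x - det.x, f.y - det.y)
            if f.label == det.label and d <= best_d:
                best, best_d = f, d
        if best is None:
            conflicts.append(("presence", det.label))
            continue
        matched.add(best.feature_id)
        for key, value in det.attributes.items():
            if key in best.attributes and best.attributes[key] != value:
                conflicts.append(("attribute", f"{best.feature_id}.{key}"))
    # visible map features that no confident detection explained
    for f in features:
        if f.visible and f.feature_id not in matched:
            conflicts.append(("absence", f.feature_id))
    return conflicts


features = [
    MapFeature("lane_12", "lane_marking", 0.0, 0.0),
    MapFeature("sign_7", "speed_limit_sign", 10.0, 0.0, {"limit_kph": 50}),
]
detections = [
    Detection("stop_sign", 5.0, 5.0, 0.99),
    Detection("speed_limit_sign", 10.5, 0.0, 0.95, {"limit_kph": 30}),
]
print(classify_conflicts(detections, features))
# [('presence', 'stop_sign'), ('attribute', 'sign_7.limit_kph'), ('absence', 'lane_12')]
```

A real system would also need to decide whether an unmatched detection is a map error or a perception error, which is where the expert review described later comes in.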

With automated triggers, the system can selectively log data around the moment of failure. This is far more efficient than recording entire driving sessions, because it isolates the safety-critical edge cases that make up the long tail of autonomous driving.
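Selective logging is often built on a rolling buffer: the vehicle keeps the last few seconds of frames in memory and persists them, plus a short tail, only when a trigger fires. The sketch below is a minimal illustration, assuming frames arrive as `(timestamp, frame)` pairs in time order; `TriggeredRecorder` and its window lengths are hypothetical, and `saved_clips` stands in for whatever storage or upload path a real stack uses.

```python
from collections import deque


class TriggeredRecorder:
    """Keeps a short rolling history in memory and saves a clip only when triggered."""

    def __init__(self, pre_seconds=5.0, post_seconds=2.0):
        self.pre_seconds = pre_seconds
        self.post_seconds = post_seconds
        self.buffer = deque()      # (timestamp, frame) pairs, oldest first
        self.active_clip = None    # clip being assembled after a trigger
        self.clip_end = None
        self.saved_clips = []      # stand-in for writing to disk or uploading

    def add_frame(self, timestamp, frame):
        self.buffer.append((timestamp, frame))
        # discard frames that fell out of the pre-trigger window
        while self.buffer[0][0] < timestamp - self.pre_seconds:
            self.buffer.popleft()
        if self.active_clip is not None:
            self.active_clip.append((timestamp, frame))
            if timestamp >= self.clip_end:
                self.saved_clips.append(self.active_clip)
                self.active_clip = None

    def trigger(self, timestamp):
        if self.active_clip is None:
            # seed the clip with the buffered history leading up to the event
            self.active_clip = list(self.buffer)
            self.clip_end = timestamp + self.post_seconds


recorder = TriggeredRecorder(pre_seconds=2.0, post_seconds=2.0)
for t in range(10):
    recorder.add_frame(float(t), f"frame_{t}")
    if t == 5:  # e.g. a presence conflict fires here
        recorder.trigger(float(t))
print([ts for ts, _ in recorder.saved_clips[0]])  # [3.0, 4.0, 5.0, 6.0, 7.0]
```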

Alongside event triggers, localization techniques provide another check. Advanced vehicles run SLAM (Simultaneous Localization and Mapping) and visual odometry in the background, comparing their estimated position with the HD map’s expected position.

By analyzing localization residuals (mismatches between expected and observed positions), the system can pinpoint areas where the physical world no longer aligns with the geometry of the HD maps simulation.
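One simple way to turn residuals into locations is to bucket them into a spatial grid and flag cells whose average residual is persistently high. This is a minimal sketch, assuming each sample pairs the localizer's estimated `(x, y)` with the map-expected `(x, y)`; `flag_drifted_cells`, the 20 m cell size, the 0.3 m threshold, and the minimum sample count are illustrative values, not recommendations.

```python
import math
from collections import defaultdict


def flag_drifted_cells(samples, cell_size_m=20.0, threshold_m=0.3, min_samples=3):
    """samples: iterable of ((est_x, est_y), (map_x, map_y)) position pairs.

    Returns {cell: mean_residual_m} for grid cells whose mean localization
    residual exceeds threshold_m, using at least min_samples observations.
    """
    residuals = defaultdict(list)
    for (ex, ey), (mx, my) in samples:
        cell = (math.floor(mx / cell_size_m), math.floor(my / cell_size_m))
        residuals[cell].append(math.hypot(ex - mx, ey - my))
    flagged = {}
    for cell, values in residuals.items():
        if len(values) >= min_samples:
            mean_residual = sum(values) / len(values)
            if mean_residual > threshold_m:
                flagged[cell] = mean_residual
    return flagged


# three well-localized samples in cell (0, 0), three drifted samples in cell (1, 0)
samples = [((1.05, 1.0), (1.0, 1.0))] * 3 + [((25.5, 1.0), (25.0, 1.0))] * 3
print(flag_drifted_cells(samples))  # {(1, 0): 0.5}
```

Requiring several samples per cell keeps a single noisy pose estimate from triggering a map update.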

Once an error is detected, data pruning extracts only the relevant onboard sensor data (LiDAR, camera, radar, pose) from the failure event. This smart filtering focuses on the moment-of-truth frames to minimize noise and enable faster reconstruction and analysis without wading through hours of irrelevant data.
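At its simplest, pruning is a topic filter plus a time window around the event. The sketch assumes log messages are `(topic, timestamp, payload)` tuples; the topic names in `RELEVANT_TOPICS`, the function `prune_log`, and the 3 s / 1 s window are hypothetical.

```python
# Hypothetical topic names; real logs use whatever channel names the stack defines.
RELEVANT_TOPICS = {"lidar_top", "camera_front", "radar_front", "pose"}


def prune_log(messages, event_time, before_s=3.0, after_s=1.0, topics=RELEVANT_TOPICS):
    """Keep (topic, timestamp, payload) messages on relevant topics that fall inside
    [event_time - before_s, event_time + after_s]; everything else is dropped."""
    start, end = event_time - before_s, event_time + after_s
    return [
        (topic, ts, payload)
        for topic, ts, payload in messages
        if topic in topics and start <= ts <= end
    ]


log = [
    ("camera_front", 8.5, "img_0"),
    ("camera_front", 12.5, "img_1"),
    ("infotainment", 12.0, "ui_event"),
    ("pose", 13.0, "pose_7"),
    ("lidar_top", 15.0, "sweep_3"),
]
print(prune_log(log, event_time=12.0))
# [('camera_front', 12.5, 'img_1'), ('pose', 13.0, 'pose_7')]
```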

The Extraction Pipeline: Reconstructing Real-World Geometry from Logs

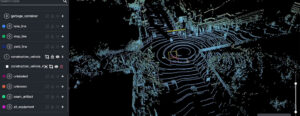

After collecting the critical sensor data, the next step is to recreate the scene that caused the error. This requires fusing data from different sensors and aligning it in time and space, because AVs carry multiple cameras, LiDARs, and radars, each with its own clock and mounting orientation. Timestamp synchronization and calibration matrices tie all sensor readings to a common reference frame.
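Two pieces of that alignment can be sketched directly: nearest-timestamp matching between two sensor streams, and a 4x4 homogeneous extrinsic transform that moves LiDAR points into the vehicle frame. This is a minimal pure-Python sketch; the 50 ms tolerance and the example extrinsic (a translation with no rotation) are assumed values, and production pipelines typically also compensate for ego motion during a LiDAR sweep.

```python
import bisect


def match_nearest(reference_times, other_times, tolerance_s=0.05):
    """For each reference timestamp, return the index of the nearest entry in the
    sorted list other_times, or None if no entry lies within tolerance_s."""
    matches = []
    for t in reference_times:
        i = bisect.bisect_left(other_times, t)
        # the nearest neighbour is either just before or just after the insertion point
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_times)]
        best = min(candidates, key=lambda j: abs(other_times[j] - t), default=None)
        if best is not None and abs(other_times[best] - t) <= tolerance_s:
            matches.append(best)
        else:
            matches.append(None)
    return matches


def to_vehicle_frame(points_sensor, T_vehicle_from_sensor):
    """Apply a 4x4 homogeneous extrinsic (row-major nested lists) to (x, y, z) points."""
    transformed = []
    for x, y, z in points_sensor:
        p = (x, y, z, 1.0)
        transformed.append(
            tuple(sum(T_vehicle_from_sensor[r][c] * p[c] for c in range(4)) for r in range(3))
        )
    return transformed


camera_times = [0.00, 0.10, 0.20]
lidar_times = [0.01, 0.12, 0.40]
print(match_nearest(camera_times, lidar_times))  # [0, 1, None]

# Example extrinsic: LiDAR mounted 1.5 m forward and 2.0 m up, no rotation (assumed values).
T = [[1, 0, 0, 1.5], [0, 1, 0, 0.0], [0, 0, 1, 2.0], [0, 0, 0, 1]]
print(to_vehicle_frame([(1.0, 0.0, 0.0)], T))  # [(2.5, 0.0, 2.0)]
```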

With the data aligned, the system uses reconstruction tools to build high-fidelity digital twins for the HD maps simulation. Recent techniques such as Neural Radiance Fields (NeRFs) and 3D Gaussian Splatting use machine learning to turn scattered sensor logs into photorealistic 3D scenes.

While NeRFs provide dense detail, 3D Gaussian Splatting represents the scene as millions of small 3D Gaussians that can be rasterized much faster than a NeRF can be rendered, while still capturing fine detail. This lets teams quickly generate ground-truth models of the exact location where a failure happened.
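To make the Gaussian representation concrete, here is a sketch of how a Gaussian-splatting renderer shades one pixel once the Gaussians have already been projected to 2D: each splat's opacity is scaled by its Gaussian falloff, and colors are alpha-composited front to back. It omits projection, tiling, view-dependent (spherical-harmonic) color, and training entirely; the tuple input format and the alpha and transmittance cutoffs are illustrative assumptions.

```python
import math


def gaussian_weight(pixel, mean, cov):
    """exp(-0.5 * d^T cov^-1 d) for a projected 2D Gaussian with covariance
    ((a, b), (b, c)); cov is assumed positive definite."""
    dx, dy = pixel[0] - mean[0], pixel[1] - mean[1]
    (a, b), (_, c) = cov
    det = a * c - b * b
    # closed-form inverse of a symmetric 2x2 matrix
    inv_a, inv_b, inv_c = c / det, -b / det, a / det
    mahalanobis_sq = inv_a * dx * dx + 2.0 * inv_b * dx * dy + inv_c * dy * dy
    return math.exp(-0.5 * mahalanobis_sq)


def shade_pixel(pixel, splats, max_alpha=0.99, min_transmittance=1e-4):
    """Front-to-back alpha compositing of projected Gaussians at one pixel.

    splats: list of (depth, mean2d, cov2d, opacity, rgb) tuples.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for _depth, mean, cov, opacity, rgb in sorted(splats, key=lambda s: s[0]):
        alpha = min(max_alpha, opacity * gaussian_weight(pixel, mean, cov))
        for k in range(3):
            color[k] += transmittance * alpha * rgb[k]
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:
            break  # pixel is effectively opaque; farther splats are hidden
    return tuple(color)


identity = ((1.0, 0.0), (0.0, 1.0))
splats = [
    (2.0, (0.0, 0.0), identity, 1.0, (0.0, 0.0, 1.0)),  # far, opaque blue
    (1.0, (0.0, 0.0), identity, 0.5, (1.0, 0.0, 0.0)),  # near, half-transparent red
]
print(shade_pixel((0.0, 0.0), splats))  # approximately (0.5, 0.0, 0.495)
```

Because this is a sort plus a per-pixel sum rather than sampling many points along each camera ray, it maps well onto GPU rasterization, which is where the speed advantage over NeRF rendering comes from.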

Once the scene’s digital twin is created, the final step is converting it into a structured semantic map. This involves identifying changes to infrastructure, such as new bollards, shifted lane markings, or modified docking zones, and updating the vector layer in the HD maps simulation.

Semantic extraction uses vision models to classify every element in the reconstructed scene. The result is a collection of high-precision map elements:

- Vectorized Lanes: Centerlines and boundaries with centimeter precision.

- Traffic Primitives: Exact 3D locations of stop lines, crosswalks, and signals.

- Physical Barriers: 3D meshes of curbs, walls, and other non-drivable areas.
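One way to hold these layers is a small set of typed records. The schema below is a hypothetical illustration, not a standard format such as ASAM OpenDRIVE or Lanelet2. It assumes coordinates in metres in a local map frame, and its `centerline_from_boundaries` helper uses the simplest possible method (midpoints of boundary points that have already been resampled into correspondence), rounded to the centimetre.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point3 = Tuple[float, float, float]  # metres in a local map frame (assumed)


@dataclass
class VectorLane:
    lane_id: str
    left_boundary: List[Point3]
    right_boundary: List[Point3]
    centerline: List[Point3] = field(default_factory=list)


@dataclass
class TrafficPrimitive:
    primitive_id: str
    kind: str                   # e.g. "stop_line", "crosswalk", "signal"
    geometry: List[Point3]      # polyline or polygon vertices


@dataclass
class PhysicalBarrier:
    barrier_id: str
    kind: str                   # e.g. "curb", "wall"
    vertices: List[Point3]
    faces: List[Tuple[int, int, int]]  # triangle indices into vertices


def centerline_from_boundaries(left, right):
    """Midpoints of corresponding boundary points, rounded to the centimetre.

    Assumes both boundaries were already resampled to matching point counts.
    """
    if len(left) != len(right):
        raise ValueError("resample boundaries to equal length first")
    return [
        tuple(round((lv + rv) / 2.0, 2) for lv, rv in zip(p_left, p_right))
        for p_left, p_right in zip(left, right)
    ]


lane = VectorLane(
    "lane_42",
    left_boundary=[(0.0, 1.75, 0.0), (10.0, 1.80, 0.0)],
    right_boundary=[(0.0, -1.75, 0.0), (10.0, -1.70, 0.0)],
)
lane.centerline = centerline_from_boundaries(lane.left_boundary, lane.right_boundary)
print(lane.centerline)  # [(0.0, 0.0, 0.0), (10.0, 0.05, 0.0)]
```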

By extracting these layers from real-world logs, the system ensures that the HD maps simulation accurately reflects the ground truth of the current physical world.

Differential Updating: Enhancing the HD Maps Simulation Environment

Once the new map data is extracted, it must be integrated into the existing map infrastructure. Rebuilding a global map for every small change is inefficient, so engineers use spatial version control to patch only the tile affected by a change, such as a missing crosswalk line, keeping updates efficient and incremental.
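A minimal sketch of tile-level versioning follows, assuming a square grid of fixed-size tiles and a full snapshot per tile version. Real systems may use other tiling schemes (quadkeys or hexagonal cells, for example) and store diffs rather than snapshots; `TiledMap`, `tile_key`, and the 100 m tile size are hypothetical, and the sketch ignores elements that straddle tile boundaries.

```python
import math
from collections import defaultdict

TILE_SIZE_M = 100.0  # hypothetical tile edge length


def tile_key(x, y, tile_size=TILE_SIZE_M):
    return (math.floor(x / tile_size), math.floor(y / tile_size))


class TiledMap:
    """Stores map elements per tile and keeps every version of each tile."""

    def __init__(self):
        self.history = defaultdict(list)  # tile -> list of {element_id: element} snapshots

    def current(self, tile):
        versions = self.history.get(tile)
        return dict(versions[-1]) if versions else {}

    def version(self, tile):
        return len(self.history.get(tile, []))

    def apply_patch(self, x, y, upserts=None, deletes=()):
        """Create a new version of only the tile containing (x, y)."""
        tile = tile_key(x, y)
        snapshot = self.current(tile)  # copy, so earlier versions stay untouched
        snapshot.update(upserts or {})
        for element_id in deletes:
            snapshot.pop(element_id, None)
        self.history[tile].append(snapshot)
        return tile, self.version(tile)

    def rollback(self, tile):
        """Discard the latest version of a tile, restoring the previous one."""
        if self.history.get(tile):
            self.history[tile].pop()


city_map = TiledMap()
print(city_map.apply_patch(250.0, 40.0, upserts={"crosswalk_3": {"type": "crosswalk"}}))  # ((2, 0), 1)
print(city_map.apply_patch(260.0, 10.0, upserts={"stop_line_9": {"type": "stop_line"}}))  # ((2, 0), 2)
print(city_map.version((0, 0)))  # 0
```

Keeping per-tile history makes a bad patch cheap to roll back without touching the rest of the map.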

A common problem in HD maps simulation is that virtual environments are often too clean. To make the simulation useful for training perception systems, developers use real-world perception failures to reintroduce realistic noise into the environment.

Real-world noise includes:

- Lighting and Weather: Replicating the exact sun glare, shadows, or foggy conditions that led to the original failure.

- Occlusions: Simulating how large vehicles or roadside foliage might hide critical map elements.

- Sensor Corruptions: Injecting artifacts such as missing LiDAR beams or camera color quantization into the virtual sensors.
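Missing LiDAR beams can be simulated by deleting every point that belongs to a random subset of laser rings. This is a minimal sketch, assuming each point carries a ring (beam) index as its fifth field, which many spinning-LiDAR drivers provide; `drop_lidar_beams`, the drop fraction, and the seeding are illustrative.

```python
import random


def drop_lidar_beams(points, num_rings, drop_fraction=0.25, seed=None):
    """points: list of (x, y, z, intensity, ring) tuples.

    Removes all points from a random subset of rings to mimic dead or blocked
    laser channels. Returns (kept_points, dropped_ring_ids).
    """
    rng = random.Random(seed)  # seeded for reproducible test scenarios
    num_dropped = int(round(num_rings * drop_fraction))
    dropped = set(rng.sample(range(num_rings), num_dropped))
    kept = [p for p in points if p[4] not in dropped]
    return kept, dropped


points = [(float(r), 0.0, 0.0, 0.5, r) for r in range(32)]  # one point per ring
kept, dropped = drop_lidar_beams(points, num_rings=32, drop_fraction=0.25, seed=7)
print(len(kept), len(dropped))  # 24 8
```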

With these edge cases injected, the simulation becomes a realistic test environment that challenges the perception system the same way the real world did, helping it learn to recognize the affected map elements under difficult, real-world conditions.

Throughout these updates, the map must stay logically consistent. Every patch must leave the underlying navigational graph sound: after each change, connections to adjacent roads and navigational rules are verified so that path planning still functions correctly and no dead ends or orphaned segments are introduced.
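A basic post-patch check can be sketched over a lane graph stored as a mapping from segment ID to successor IDs. It flags references to segments that do not exist, dead ends (segments with no successors that are not explicitly allowed to terminate), and orphaned segments that a breadth-first search from the declared entry points never reaches. The function name and the `entry_points` and `allowed_terminals` parameters are hypothetical; real checks would also validate turn rules and lane attributes.

```python
from collections import deque


def check_lane_graph(successors, entry_points, allowed_terminals=frozenset()):
    """successors: dict lane_id -> list of successor lane_ids. Returns a list of issues."""
    issues = []
    for lane, nexts in successors.items():
        for nxt in nexts:
            if nxt not in successors:
                issues.append(("dangling_reference", lane, nxt))
        if not nexts and lane not in allowed_terminals:
            issues.append(("dead_end", lane))
    # breadth-first search from the entry points to find reachable segments
    queue = deque(e for e in entry_points if e in successors)
    reachable = set(queue)
    while queue:
        lane = queue.popleft()
        for nxt in successors[lane]:
            if nxt in successors and nxt not in reachable:
                reachable.add(nxt)
                queue.append(nxt)
    for lane in successors:
        if lane not in reachable:
            issues.append(("orphaned", lane))
    return issues


lane_graph = {"A": ["B"], "B": ["C"], "C": [], "D": ["A"], "E": ["X"]}
print(check_lane_graph(lane_graph, entry_points={"A"}))
# [('dead_end', 'C'), ('dangling_reference', 'E', 'X'), ('orphaned', 'D'), ('orphaned', 'E')]
```

Running a check like this on every patched tile, and on its neighbours, is one way to keep a local fix from silently breaking routing elsewhere.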

Scaling Validation: Regression Testing in the Updated Environment

After updating the map, the next step is to confirm that the fix resolves the issue without introducing new errors. This starts with automated scenario generation, where the updated map fragment is replayed in simulation under multiple controlled variations.

These permutations may include changes in vehicle speed, traffic flow, lighting, or weather conditions. The goal is to ensure the original perception failure no longer appears across realistic variations of the same scenario.
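Scenario permutation is often a Cartesian product over a few parameter axes. The sketch below assumes a hypothetical `run_scenario` callback that replays the patched map fragment under one configuration and returns `True` if the original failure still reproduces. The parameter names and values in `VARIATIONS` are placeholders.

```python
from itertools import product

# Placeholder variation axes; real suites would use the simulator's own parameters.
VARIATIONS = {
    "ego_speed_mps": [5.0, 10.0, 15.0],
    "traffic_density": ["low", "high"],
    "lighting": ["noon", "dusk", "low_sun_glare"],
    "weather": ["clear", "fog"],
}


def generate_scenarios(variations):
    """Yield one dict per combination of the variation values."""
    keys = list(variations)
    for values in product(*(variations[k] for k in keys)):
        yield dict(zip(keys, values))


def failures_remaining(run_scenario, variations=VARIATIONS):
    """Return every configuration in which the original failure still reproduces.

    run_scenario is a hypothetical callback: config dict -> True if the failure recurs.
    """
    return [cfg for cfg in generate_scenarios(variations) if run_scenario(cfg)]


print(len(list(generate_scenarios(VARIATIONS))))  # 36
# stand-in replay: pretend the failure still reproduces only in fog with low sun glare
still_failing = failures_remaining(lambda cfg: cfg["weather"] == "fog" and cfg["lighting"] == "low_sun_glare")
print(len(still_failing))  # 6
```

A full product grows quickly as axes are added, so larger suites often sample the space instead of enumerating it.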

Next, teams close the metric loop by measuring perception performance before and after the update. In a successful update, the original failure no longer reproduces when the perception system is tested against the reconstructed virtual twin. Key metrics such as detection accuracy, false positives, false negatives, and localization residuals are tracked to confirm that the issue has been fully resolved.
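The before/after comparison amounts to computing the same metrics on the same replayed scenarios with the old map and the new one. This minimal sketch assumes detections have already been matched to ground truth, so each run reduces to true-positive, false-positive, and false-negative counts plus per-frame localization residuals. `RunResult` and `compare_runs` are hypothetical names, and precision and recall stand in for "detection accuracy".

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RunResult:
    true_positives: int
    false_positives: int
    false_negatives: int
    localization_residuals_m: list

    def precision(self):
        denom = self.true_positives + self.false_positives
        return self.true_positives / denom if denom else 1.0

    def recall(self):
        denom = self.true_positives + self.false_negatives
        return self.true_positives / denom if denom else 1.0


def compare_runs(before, after):
    """Positive precision/recall deltas and negative error/residual deltas mean improvement."""
    return {
        "precision_delta": after.precision() - before.precision(),
        "recall_delta": after.recall() - before.recall(),
        "false_positive_delta": after.false_positives - before.false_positives,
        "false_negative_delta": after.false_negatives - before.false_negatives,
        "mean_residual_delta_m": mean(after.localization_residuals_m)
        - mean(before.localization_residuals_m),
    }


before = RunResult(90, 10, 10, [0.4, 0.6])  # replay against the old map
after = RunResult(99, 1, 1, [0.1, 0.1])     # same scenarios against the patched map
print(compare_runs(before, after))
```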

Before pushing changes to production, the new map tiles are often validated using shadow mode. In this setup, vehicles run the updated HD map internally while continuing to operate using the production map.

This lets teams observe how the updated map performs in real-world conditions without affecting live behavior. If shadow mode results consistently match or improve upon production performance, the update is approved for full deployment.
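The approval rule can be expressed as a small gate over paired shadow-mode results. This sketch assumes each drive segment yields one scalar score for the production map and one for the candidate map (higher is better, for example a perception F1 score). It also assumes that "consistently" means the candidate is no worse than production, within a tolerance, on at least a chosen fraction of segments. The function name and all three thresholds are hypothetical.

```python
def approve_for_deployment(paired_scores, tolerance=0.01, min_fraction=0.95, min_segments=50):
    """paired_scores: list of (production_score, candidate_score) per drive segment."""
    if len(paired_scores) < min_segments:
        return False  # not enough shadow-mode evidence yet
    ok = sum(1 for prod, cand in paired_scores if cand >= prod - tolerance)
    return ok / len(paired_scores) >= min_fraction


steady = [(0.90, 0.92)] * 100
regressed = [(0.90, 0.80)] * 10 + [(0.90, 0.90)] * 90
print(approve_for_deployment(steady), approve_for_deployment(regressed))  # True False
```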

Turn Every Perception Failure into HD Map Intelligence with iMerit

Implementing a closed-loop HD maps simulation pipeline at scale requires strong data operations to ingest, validate, and structure the massive volumes of sensor logs flowing from deployed fleets, plus human-in-the-loop review to ensure ground-truth accuracy. iMerit provides the specialized expertise and infrastructure to power this closed-loop process.

Expert-in-the-Loop Validation

iMerit uses a specialized workforce and QA workflows to review and correct complex perception and HD map inconsistencies. While automated tools can identify many changes, human judgment resolves edge cases that algorithms might miss.

iMerit’s annotators undergo thorough training in perception challenges for autonomous systems. Domain experts review ambiguous scenarios to confirm whether detected map-perception discrepancies represent genuine map errors or perception failures.

High-Precision Semantic Labeling

High-precision semantic labeling ensures that every update meets the strict requirements of autonomous driving and Physical AI systems. iMerit’s team of specialists provides detailed annotations across multiple sensor modalities, including:

- 2D Lane-Level Annotations: Mapping lane geometry, markings, and attributes with precise detail.

- 3D Localization: Labeling semantic relationships and object segmentations in 3D space.

- Multi-Sensor Fusion: Synchronizing 2D imagery and 3D LiDAR point clouds within a common coordinate system.

Scaling the Loop

iMerit has built the infrastructure needed to scale the loop and turn raw logs into production-ready simulation assets. Mapping an entire city or a massive logistics network requires coordinating hundreds of expert annotators and managing petabytes of data. iMerit’s tiered GIS skill structure allows it to handle everything from foundational road digitization to advanced spatial analysis and transport network modeling.

For example, iMerit partnered with a U.S.-based robotics company to scale HD mapping without expanding its internal team. iMerit improved throughput with 12 tool enhancements and improved quality through city-level QA feedback, validator reviews, and tailored training.

iMerit also redesigned the QA workflow with three internal checkpoints, reduced rework, and improved first-pass acceptance. This resulted in a 50% increase in monthly output, a 20% reduction in annotation time, and a 60% reduction in QA rejection rates.

Conclusion

Autonomous and robotic systems operate in dynamic environments. To keep up, teams need to shift from static maps to an evolving spatial graph that grows with the environment. A robust HD maps simulation loop is the key to that shift.

By detecting the differences between the map and reality, rebuilding the true scene, and feeding those insights back into the HD maps simulation, teams can avoid repeated regressions and improve over time. This process turns every perception failure into map intelligence, so each error refines the digital twin.

Closed-loop map maintenance ensures that simulation environments remain as accurate and up-to-date as possible. This tight integration between perception data and HD maps infrastructure accelerates development, improves safety, and maximizes autonomy.

Looking to automate your map maintenance and simulation pipelines? Discover how iMerit’s expert-in-the-loop solutions can help.