As Large Language Models (LLMs) take center stage in enterprise AI, high-quality conversational datasets have become critical for training, fine-tuning, and evaluation. But the process of collecting, structuring, and labeling those conversations can be complex, especially when speed and accuracy are key.

Ango Hub streamlines this process, enabling teams to create, import, and annotate LLM chat assets with ease, supporting scalable workflows for high-quality model training. Whether you’re bootstrapping new conversations or bringing in pre-collected dialogue data, everything flows through a centralized, intuitive interface.

Generate Live LLM Conversations From Scratch

Need to build synthetic conversations from the ground up? Ango Hub lets you create empty conversation threads that annotators can populate in real time. This is especially useful when gathering responses for RLHF workflows, prompt testing, or custom chatbot training.

Getting Started with LLM Chat Asset Creation

To create new chat assets from scratch in Ango Hub, open the setup panel, where you can:

- Select a Storage Location: Choose where your chat data will be saved, either your own cloud storage or a secure, platform-managed option such as encrypted AWS S3.

- Set Number of Chats: Define how many conversation threads you want to create.

- Assign an LLM: Select which LLM the new assets should be associated with.

- Add Metadata: Add basic details like project name or model type to keep assets organized.

Each conversation is stored under: /{projectId}/conversations/{conversationId}.json.

This setup keeps your LLM chat assets structured, secure, and ready for annotation or training.
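As a rough illustration of how the setup options and the storage layout fit together, here is a minimal Python sketch. It is not Ango Hub's API: the `ChatBatchSettings` class, the `plan_empty_conversations` helper, the UUID-based conversation IDs, and the `llm` and `metadata` field names are all hypothetical. Only the path pattern comes from the description above.

```python
import json
import uuid
from dataclasses import dataclass, field


@dataclass
class ChatBatchSettings:
    """Hypothetical mirror of the setup panel options (not an Ango Hub API)."""
    project_id: str
    num_chats: int
    llm: str
    metadata: dict = field(default_factory=dict)


def conversation_path(project_id: str, conversation_id: str) -> str:
    # Follows the layout quoted above:
    # /{projectId}/conversations/{conversationId}.json
    return f"/{project_id}/conversations/{conversation_id}.json"


def plan_empty_conversations(settings: ChatBatchSettings) -> dict:
    """Map each planned storage path to an empty conversation payload."""
    planned = {}
    for _ in range(settings.num_chats):
        conversation_id = uuid.uuid4().hex  # assumed ID scheme
        path = conversation_path(settings.project_id, conversation_id)
        planned[path] = {
            "messages": [],  # left empty for annotators to populate
            "llm": settings.llm,  # assumed field name
            "metadata": dict(settings.metadata),  # assumed field name
        }
    return planned


settings = ChatBatchSettings(
    project_id="demo-project",
    num_chats=3,
    llm="example-llm",
    metadata={"project_name": "Support bot"},
)
for path, payload in plan_empty_conversations(settings).items():
    print(path, json.dumps(payload))
```

Running the sketch prints three paths under /demo-project/conversations/, each paired with an empty conversation that records the assigned model and metadata.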

Import Existing Conversations with One Upload

Already have chat logs from another source? Upload them directly as JSON files to quickly turn them into label-ready assets. Simply drag and drop a .json file into the LLM Asset Creator panel. The format is lightweight and easy to structure:

    {
      "messages": [
        {
          "id": "1",
          "content": "What are your business hours?",
          "user": {
            "role": "user",
            "id": "jane@example.com"
          }
        },
        {
          "id": "2",
          "content": "We're open 9 AM to 6 PM, Monday to Friday.",
          "user": {
            "role": "bot",
            "id": "supportbot"
          }
        }
      ]
    }

Requirements:

- Each message must have a unique, sequential id

- The role should be either user or bot

- You can customize the user.id field however you like

This ensures your LLM chat assets are not only structured but immediately ready for annotation, QA, and downstream integration into model pipelines.
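Before uploading, it can help to check a file against the requirements listed above. The following Python sketch is a hypothetical pre-upload check, not part of Ango Hub. It assumes that "sequential" means the string ids "1", "2", "3", and so on, in message order, as in the sample, and that `content` is a string.

```python
import json

ALLOWED_ROLES = {"user", "bot"}


def validate_conversation(conversation: dict) -> list:
    """Return a list of problems; an empty list means every check passed."""
    messages = conversation.get("messages")
    if not isinstance(messages, list):
        return ["top-level 'messages' must be a list"]

    problems = []
    for position, message in enumerate(messages, start=1):
        if not isinstance(message, dict):
            problems.append(f"message {position}: must be an object")
            continue
        # Assumption: ids are the strings "1", "2", ... in message order,
        # which also guarantees that they are unique.
        found_id = message.get("id")
        if found_id != str(position):
            problems.append(
                f"message {position}: expected id '{position}', got {found_id!r}"
            )
        if not isinstance(message.get("content"), str):
            problems.append(f"message {position}: 'content' must be a string")
        user = message.get("user")
        if not isinstance(user, dict):
            problems.append(f"message {position}: 'user' must be an object")
            continue
        if user.get("role") not in ALLOWED_ROLES:
            problems.append(f"message {position}: role must be 'user' or 'bot'")
    return problems


sample = json.loads("""
{
  "messages": [
    {"id": "1", "content": "What are your business hours?",
     "user": {"role": "user", "id": "jane@example.com"}},
    {"id": "2", "content": "We're open 9 AM to 6 PM, Monday to Friday.",
     "user": {"role": "bot", "id": "supportbot"}}
  ]
}
""")
print(validate_conversation(sample))
```

The function collects every problem instead of stopping at the first one, so a malformed export can be fixed in a single pass. The `user.id` value is deliberately not checked, since that field is free-form.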

Why Chat Asset Management Matters

LLMs are only as good as the conversations they learn from. With the growing demand for instruction-tuned and feedback-refined models, the ability to manage chat data across multiple sources, formats, and use cases becomes critical. From research teams working on RLHF to enterprises building domain-specific chatbots, managing LLM data pipelines at scale requires the right tooling. That’s where Ango Hub comes in.

Managing and Scaling LLM Chat Assets with Ango Hub

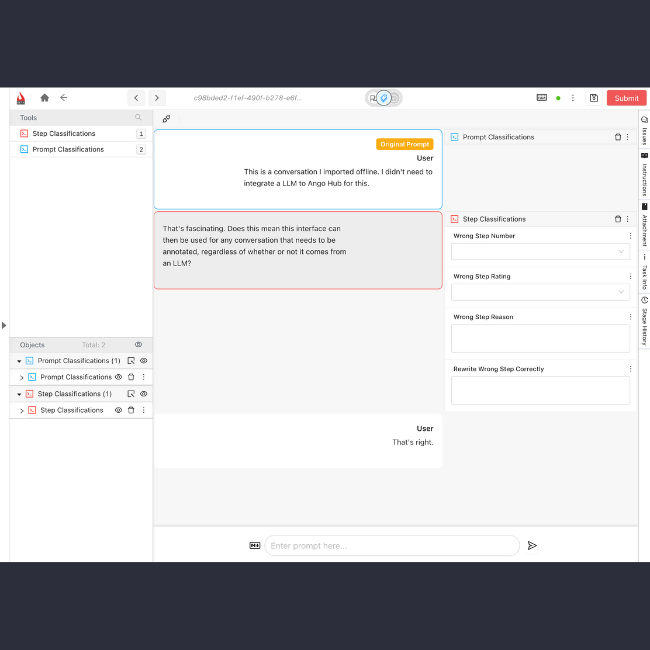

Ango Hub brings structure, transparency, and scalability to the entire LLM chat annotation workflow.

With purpose-built tools like the LLM Asset Creator and LLM Chat Labeling Editor, users can:

- Seamlessly upload or generate conversations

- Assign models and metadata to each asset

- Annotate at the message level with precision

- Collaborate across teams with detailed versioning and QA

Designed for enterprise-grade AI development, Ango Hub bridges the gap between human-in-the-loop annotation and LLM-driven automation. Whether you’re curating high-quality feedback loops, labeling model responses for fine-tuning, or building red-teaming datasets for safety testing, Ango Hub gives your team the control and flexibility to move faster and work smarter.

Conclusion

LLM development doesn’t stop at generating responses; it depends on the ability to iterate, audit, and align those responses with real-world expectations. That work starts with the data.

Ango Hub empowers teams to take charge of their LLM workflows by making LLM chat asset creation and annotation intuitive, scalable, and precise. Whether you’re generating synthetic dialogues or importing rich conversation history, the platform ensures every asset is ready for high-quality, human-in-the-loop feedback and downstream optimization.

As conversational AI becomes more powerful and more pervasive, Ango Hub gives you the infrastructure to build responsibly, align intelligently, and deploy with confidence.